AGitFX is an audio tool that lets you actively shape the sound of your guitar by moving the instrument. It offers an experimental approach to making gesture-controlled guitars accessible to everyone. It requires a laptop running Ableton Live with Max for Live, plus a mobile device with the Android app OSCHookV2. The following video demonstrates the audio tool and illustrates its principles:

Links

Audio tool as Max patch: AGitFX

Download this app on your mobile device: OSCHookV2

Blues backing track: AGitFX-Blues

How It Works

AGitFX - Paper

NIME (the International Conference on New Interfaces for Musical Expression) gives researchers and musicians the opportunity to exchange ideas on innovative interface design for music and sound. Its central topics are the interaction between humans and technology, interface design and computer music. Papers on these topics are selected for the annual conference and published on the NIME website. Their aim is to present projects as well as theoretical concepts with a focus on musical expression. Musical expression describes the ability to render music or sounds in a personal way and is shaped by dynamics, phrasing, timbre and articulation.

As part of the module "Music Informatics", students write their own NIME paper. It consists of a practical project and an accompanying description in ACM format. This project is briefly presented below.

Relevance

According to a study by the International Telecommunication Union, there have been more mobile phone connections than people in the world since 2015. In 2019, this number peaked at 8.15 billion. The statistic counts all SIM cards with active mobile contracts, which are not necessarily in use. Even so, it suggests that a large part of the world's population owns a mobile phone. A particularly useful feature of these highly developed devices is their built-in sensors, which any app developer can access. As a result, app stores offer countless applications in which these sensors play a central role.

The Goal

The challenge of this project is to connect sensor measurements with musical expression. As a solution, a mobile phone attached behind the guitar bridge transmits its measurement data to the computer in real time. The guitar player can thus control the effects on the computer by lifting and moving the guitar. The following figure illustrates the experimental setup:

One way to modulate effects is the acceleration sensor, or accelerometer. It uses a test mass to measure the inertial force that acts when the device accelerates. It can also serve as an inclinometer to measure the relative orientation of an object. If the mobile phone is mounted perpendicular to the guitar bridge, the movement along the three axes X, Y and Z can therefore be measured. The light sensor offers another modulation option: because the picking hand covers part of the area above the sensor, it casts more shadow the closer it gets. This type of measurement only works with sufficient ambient light, and the usable distance range depends on the intensity of the light sources. The microphone provides a third way of modifying effects. It measures the sound pressure level in decibels and can be used, for example, by blowing on it.
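To make the accelerometer and light-sensor ideas more concrete, here is a minimal Python sketch. It is not the AGitFX patch itself, which is built in Max/MSP. It assumes the phone is roughly at rest, so the accelerometer mainly measures gravity, and pitch and roll angles follow from `atan2`. The light reading is converted into a shadow amount relative to a hypothetical calibrated ambient level. A clamped linear `scale` then maps either value onto an effect parameter range. The function names, axis conventions and value ranges are illustrative assumptions.

```python
import math


def tilt_angles(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Estimate pitch and roll (degrees) from one accelerometer reading.

    Assumes the phone is still enough that the reading is dominated by
    gravity. The axis convention is an assumption and depends on how the
    phone is mounted behind the bridge.
    """
    pitch = math.degrees(math.atan2(ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def shade_amount(lux: float, ambient_lux: float) -> float:
    """Map a light reading to 0.0 (no shadow) .. 1.0 (sensor fully covered).

    ambient_lux is a hypothetical calibration value, measured with the
    picking hand away from the sensor. Without enough ambient light there
    is no usable range.
    """
    if ambient_lux <= 0:
        raise ValueError("ambient light level must be positive")
    return min(max(1.0 - lux / ambient_lux, 0.0), 1.0)


def scale(value: float, in_lo: float, in_hi: float,
          out_lo: float = 0.0, out_hi: float = 1.0) -> float:
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)  # keep out-of-range readings inside the parameter range
    return out_lo + t * (out_hi - out_lo)


if __name__ == "__main__":
    pitch, roll = tilt_angles(0.0, 4.9, 8.5)  # phone tilted by roughly 30 degrees
    # Example mapping: roll from -90..90 degrees to a 0..127 MIDI-style controller value
    print(round(scale(roll, -90.0, 90.0, 0.0, 127.0)))
    print(shade_amount(120.0, 400.0))
```

Inside Max, the `scale` object performs this kind of linear range mapping. Strumming and swinging the guitar add their own acceleration on top of gravity, so an angle estimate like this one jitters during fast movements. Smoothing the readings with a low-pass filter is a common remedy.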

Beyond these sensors, there are countless other measurements and detectors that could be used for experiments. To carry out the project and examine the significance of each sensor in more detail, I will initially limit myself to the three sensors described above. The application works with all types of guitars; this write-up refers to the electric guitar.

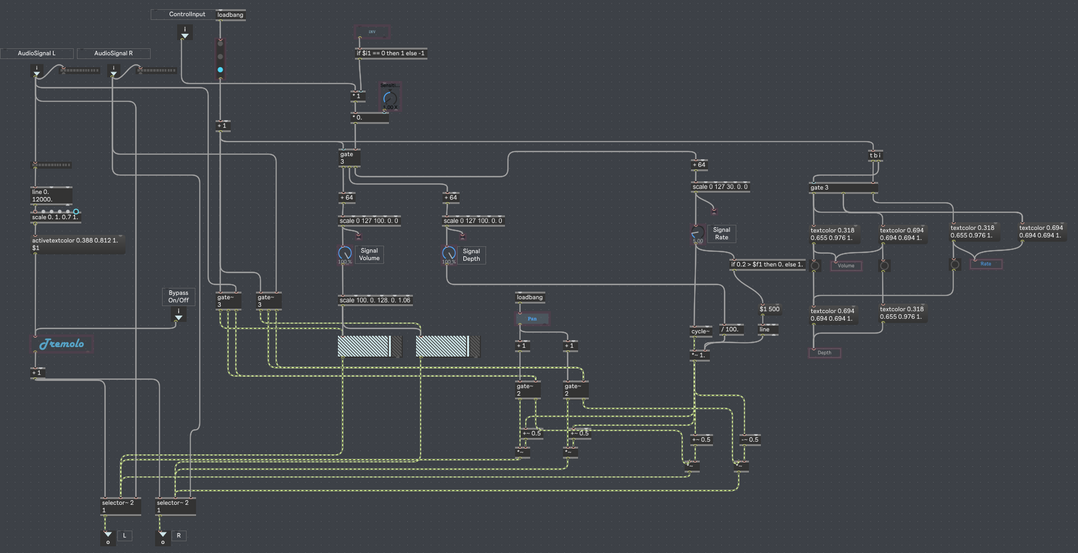

The technical setup is realised with Ableton Live and Max/MSP, which offer versatile audio processing functions. Because the setup runs inside the DAW, it can also be integrated directly into an audio production. An Android application reads out the sensor data. Alternatively, a custom app tailored to the project's requirements could be built with Android Studio. The network protocol Open Sound Control (OSC) serves as the interface between the Max/MSP development environment and the mobile device. OSC is supported by most multimedia installations and can transmit data directly to the PC over WLAN with low latency.
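To show what actually travels over the WLAN, here is a minimal Python sketch of how an OSC message with float arguments is laid out, following the OpenSound Control specification listed in the references. An address string and a type tag string are each null-terminated and padded to a multiple of 4 bytes, followed by big-endian 32-bit floats. The sketch only handles float arguments. The address `/accelerometer/x` is hypothetical and is not necessarily the address scheme OSCHookV2 uses. In the actual setup, Max/MSP decodes incoming packets itself, so this is only an illustration of the protocol.

```python
import struct


def _osc_string(s: str) -> bytes:
    """Encode an OSC-string: ASCII bytes, a null terminator, then zero padding to a multiple of 4."""
    raw = s.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def encode_osc(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats (type tag 'f')."""
    type_tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return _osc_string(address) + _osc_string(type_tags) + args


def _read_osc_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read an OSC-string at a 4-byte-aligned offset; return it and the next aligned offset."""
    end = data.index(b"\x00", offset)
    return data[offset:end].decode("ascii"), (end + 4) & ~3


def decode_osc(data: bytes) -> tuple[str, list[float]]:
    """Parse an OSC message that carries only float32 arguments."""
    address, offset = _read_osc_string(data, 0)
    tags, offset = _read_osc_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    values = []
    for tag in tags[1:]:
        if tag != "f":
            raise ValueError(f"type tag {tag!r} is not handled by this sketch")
        (value,) = struct.unpack_from(">f", data, offset)
        values.append(value)
        offset += 4
    return address, values


if __name__ == "__main__":
    # "/accelerometer/x" is a hypothetical address, not necessarily what OSCHookV2 sends
    packet = encode_osc("/accelerometer/x", 0.25)
    print(len(packet), decode_osc(packet))
```

OSC messages are usually sent as UDP datagrams. In Python, `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, (host, port))` would deliver one, with `host` and `port` set to wherever the receiving patch listens. In Max, the `udpreceive` object listens on such a port.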

Code Snippets

References

NIME 2021: The International Conference on New Interfaces for Musical Expression. URL: www.nime.org/ (accessed: 17.05.2021)

Post, Uwe 2018: Android-Apps entwickeln für Einsteiger. 7th edition. Bonn: Rheinwerk Verlag

Tenzer, F. 2020: Anzahl der Mobilfunkanschlüsse weltweit bis 2020, cited from de.statista.com. URL: de.statista.com/statistik/daten/studie/2995/umfrage/entwicklung-der-weltweiten-mobilfunkteilnehmer-seit-1993/ (accessed: 17.05.2021)

Wright, Matt 2002: OpenSound Control Specification. URL: web.archive.org/web/20030914224904/http://cnmat.berkeley.edu/OSC/OSC-spec.html (accessed: 17.05.2021)
